Published by TringTring.AI Team | Technical Deep Dive | 12 minute read

The artificial intelligence revolution has transformed how businesses communicate with customers. Among the most sophisticated developments is the emergence of AI voice agents – intelligent systems capable of conducting natural, human-like conversations at scale. But how exactly do these systems work behind the scenes?

This guide walks through the architecture, component technologies, and processing pipeline behind modern AI voice agents. It is written for technical leaders evaluating voice AI solutions and for developers who want to understand how the underlying technology fits together.

Introduction to AI Voice Agent Architecture

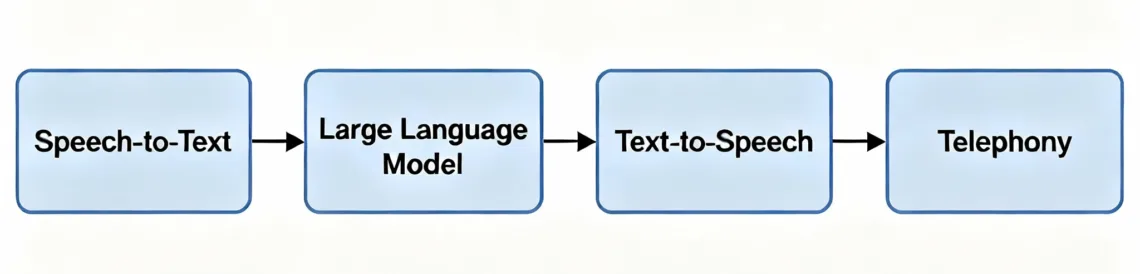

AI voice agents represent a convergence of multiple advanced technologies working in harmony. Unlike traditional Interactive Voice Response (IVR) systems that follow rigid decision trees, modern AI voice agents leverage machine learning, natural language processing, and sophisticated orchestration platforms to deliver truly conversational experiences.

Key Capabilities of Modern AI Voice Agents:

- Natural speech recognition and synthesis

- Contextual understanding and memory retention

- Real-time decision making and response generation

- Multi-language and accent support

- Integration with business systems and databases

- Omnichannel conversation continuity

These capabilities rest on a stack of specialized components (speech recognition, a language model, and speech synthesis) coordinated by an orchestration layer that passes audio, text, and context between them.

Core Components and Technologies

Speech-to-Text (STT) Engines

The first critical component in any voice AI system is the Speech-to-Text engine, which converts spoken audio into machine-readable text. Modern AI voice platforms support multiple STT providers to optimize for different use cases:

Popular STT Technologies:

- OpenAI Whisper: State-of-the-art accuracy with multilingual support

- Google Speech-to-Text: Robust cloud-based recognition with real-time streaming

- AWS Transcribe: Enterprise-grade recognition with custom vocabulary support

- Deepgram: Low-latency recognition optimized for conversational AI

- Azure Cognitive Services: Microsoft’s comprehensive speech recognition suite

Technical Considerations:

- Latency Requirements: Real-time conversations require sub-200ms STT processing

- Accuracy vs Speed Trade-offs: More accurate models may introduce additional latency

- Language and Dialect Support: Global deployments need robust multilingual capabilities

- Noise Handling: Effective background noise suppression for real-world environments

Large Language Models (LLMs)

The “brain” of any AI voice agent is the Large Language Model that processes the transcribed text and generates intelligent responses. Enterprise voice platforms typically offer multiple LLM options to balance cost, performance, and capabilities:

Enterprise LLM Options:

- OpenAI GPT-4/GPT-4o: Exceptional reasoning and broad knowledge base

- Anthropic Claude: Safety-focused with superior handling of long conversations

- Google Vertex AI/Gemini: Native multimodal capabilities and Google services integration

- Azure OpenAI: Enterprise-grade deployment with Microsoft security guarantees

- Open-Source Models: Open-weight models such as Qwen, served locally through runtimes such as Ollama, for on-premises deployments

LLM Selection Criteria:

- Context Window Size: Ability to maintain conversation history (128K-2M tokens)

- Response Quality: Accuracy, relevance, and human-like naturalness

- Specialized Training: Domain-specific knowledge and industry terminology

- Cost Efficiency: Token-based pricing models and usage optimization

- Latency Performance: Response generation speed for real-time conversations

Text-to-Speech (TTS) Synthesis

Converting the LLM’s text response back to natural-sounding speech is the final step in the voice processing chain. Modern TTS engines offer remarkable voice quality and customization options:

Leading TTS Technologies:

- ElevenLabs: Ultra-realistic voice cloning and emotion control

- OpenAI TTS: High-quality synthesis with multiple voice options

- AWS Polly: Enterprise-grade with SSML support for pronunciation control

- Google Text-to-Speech: WaveNet technology for natural-sounding voices

- Azure Cognitive Services: Neural voices with custom voice creation

- Cartesia: Optimized for low-latency conversational applications

Advanced TTS Features:

- Voice Cloning: Creating custom brand voices from audio samples

- Emotion Control: Adjusting tone, pace, and emotional expression

- SSML Support: Fine-grained pronunciation and prosody control

- Multi-language Synthesis: Seamless switching between languages mid-conversation

- Real-time Optimization: Streaming synthesis to minimize latency

The Voice Processing Pipeline

Understanding the step-by-step process of how voice AI systems handle conversations is crucial for optimizing performance and troubleshooting issues.

Step 1: Audio Capture and Preprocessing

The journey begins when audio is captured from the caller through telephony infrastructure:

Audio Input → Noise Reduction → Format Conversion → Stream Buffering

Technical Details:

- Audio Formats: Typically 16kHz, 16-bit PCM or 8kHz mu-law for telephony

- Noise Reduction: Digital signal processing to improve recognition accuracy

- Voice Activity Detection (VAD): Identifying speech vs silence to optimize processing

- Buffer Management: Balancing latency with recognition accuracy
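As a rough illustration of voice activity detection, the sketch below classifies a frame of 16-bit PCM audio as speech or silence by its RMS energy. The function names and the threshold of 500 are assumptions chosen for the example; production systems generally use trained VAD models rather than a fixed energy threshold.

```python
import math
import struct

def frame_rms(frame: bytes) -> float:
    """Root-mean-square amplitude of a frame of 16-bit little-endian PCM."""
    count = len(frame) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack(f"<{count}h", frame[: count * 2])
    return math.sqrt(sum(s * s for s in samples) / count)

def is_speech(frame: bytes, threshold: float = 500.0) -> bool:
    """Treat a frame as speech when its RMS energy exceeds the threshold.

    The default threshold is an illustrative assumption and would need
    tuning against real call audio.
    """
    return frame_rms(frame) > threshold

def make_tone(amplitude: int, n_samples: int = 320,
              rate: int = 16000, freq: int = 440) -> bytes:
    """Generate a sine tone as test input (20 ms at 16 kHz by default)."""
    values = [int(amplitude * math.sin(2 * math.pi * freq * i / rate))
              for i in range(n_samples)]
    return struct.pack(f"<{n_samples}h", *values)

silence = bytes(640)     # 20 ms of digital silence, 16 kHz, 16-bit mono
loud = make_tone(8000)   # 20 ms tone standing in for speech

print(is_speech(silence), is_speech(loud))
```

Frames flagged as silence can be dropped before they reach the STT engine, which is where the processing savings come from.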

Step 2: Speech Recognition Processing

The preprocessed audio is sent to the STT engine for transcription:

Audio Stream → STT Engine → Confidence Scoring → Text Output

Optimization Strategies:

- Streaming Recognition: Processing audio in real-time chunks rather than waiting for completion

- Confidence Thresholds: Filtering low-confidence transcriptions to prevent errors

- Custom Vocabularies: Supplying domain-specific terms (as phrase lists or custom language models, depending on the provider) for improved accuracy

- Speaker Adaptation: Adjusting recognition models for individual speakers
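Confidence thresholds can be sketched in a few lines. The `Transcript` type, the `route_transcript` function, and the 0.75 cutoff are hypothetical; real STT engines report confidence in provider-specific formats, and the right cutoff depends on the engine and the use case.

```python
from dataclasses import dataclass

@dataclass
class Transcript:
    text: str
    confidence: float  # 0.0 to 1.0, as reported by the STT engine

def route_transcript(result: Transcript, threshold: float = 0.75) -> str:
    """Return 'accept' for confident, non-empty transcripts, else 'reprompt'.

    The 0.75 default is an assumption for illustration only.
    """
    if result.text.strip() and result.confidence >= threshold:
        return "accept"
    return "reprompt"

print(route_transcript(Transcript("I want to check my order", 0.93)))
print(route_transcript(Transcript("uh", 0.41)))
```

A "reprompt" outcome would typically lead the agent to ask the caller to repeat or confirm, rather than passing a doubtful transcript to the LLM.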

Step 3: Natural Language Processing

The transcribed text undergoes sophisticated processing to extract meaning and intent:

Raw Text → Intent Classification → Entity Extraction → Context Integration → Response Planning

Key NLP Components:

- Intent Recognition: Determining what the caller wants to accomplish

- Named Entity Recognition (NER): Extracting important information like dates, names, numbers

- Sentiment Analysis: Understanding emotional state and adjusting responses accordingly

- Context Management: Maintaining conversation state and history across multiple turns
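To make intent recognition and entity extraction concrete, here is a deliberately simple stand-in that uses keyword matching and regular expressions. The intent names, keyword lists, and the order-ID format (two capital letters followed by digits) are invented for the example; production systems would normally use trained classifiers or the LLM itself.

```python
import re

# Hypothetical intents and trigger words, for illustration only.
INTENT_KEYWORDS = {
    "check_order": ("order", "delivery", "shipment"),
    "billing": ("invoice", "refund", "charge"),
    "book_appointment": ("appointment", "schedule", "book"),
}

ORDER_ID = re.compile(r"\b[A-Z]{2}\d{4,}\b")    # assumed format, e.g. AB12345
ISO_DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")  # e.g. 2025-03-14

def classify_intent(text: str) -> str:
    """Return the first intent whose keywords appear in the text."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & set(keywords):
            return intent
    return "unknown"

def extract_entities(text: str) -> dict:
    """Pull order IDs and ISO-formatted dates out of the transcript."""
    return {
        "order_ids": ORDER_ID.findall(text),
        "dates": ISO_DATE.findall(text),
    }

utterance = "Where is my order AB12345?"
print(classify_intent(utterance), extract_entities(utterance))
```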

Step 4: Response Generation

The LLM generates an appropriate response based on the processed input and conversation context:

Processed Input + Context → LLM Processing → Response Generation → Output Formatting

Response Optimization:

- Prompt Engineering: Crafting effective system prompts for consistent behavior

- Temperature Control: Balancing creativity with predictability in responses

- Token Management: Optimizing context windows for long conversations

- Response Filtering: Ensuring outputs meet safety and brand guidelines
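One common approach to token management is to keep the system prompt and drop the oldest turns once a budget is exceeded. The sketch below assumes a chat-style message list with `role` and `content` keys and uses a crude four-characters-per-token estimate; a real deployment would count tokens with the model's own tokenizer.

```python
def estimate_tokens(text: str) -> int:
    """Crude estimate of about four characters per token (an assumption)."""
    return max(1, len(text) // 4)

def trim_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep system messages plus the newest turns that fit in the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    # Walk backwards from the newest turn, stopping at the first that
    # would push the total over budget.
    for message in reversed(turns):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return system + list(reversed(kept))

history = [
    {"role": "system", "content": "You are a helpful agent."},
    {"role": "user", "content": "a" * 40},
    {"role": "assistant", "content": "b" * 40},
    {"role": "user", "content": "c" * 40},
]
trimmed = trim_history(history, budget=30)
print([m["role"] for m in trimmed])
```

Dropping old turns outright loses information; summarizing them into a short note before discarding is a frequent refinement.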

Step 5: Speech Synthesis

The generated text response is converted back to natural-sounding speech:

Response Text → SSML Processing → TTS Engine → Audio Generation → Telephony Output

Synthesis Optimization:

- Streaming TTS: Beginning playback while synthesis continues to reduce perceived latency

- Voice Consistency: Maintaining the same voice characteristics throughout conversations

- Prosody Control: Adjusting emphasis, pauses, and intonation for natural delivery

- Audio Quality: Ensuring consistent volume and clarity across different telephony systems
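Streaming TTS depends on handing text to the synthesizer before the LLM has finished generating. A minimal sketch of that idea is shown below: it buffers incoming text chunks and yields each sentence as soon as it is complete. The function name is hypothetical, and the sentence splitter is naive (it would also split after abbreviations such as "Dr.").

```python
import re
from typing import Iterable, Iterator

# Split on whitespace that follows sentence-ending punctuation.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")

def sentences_from_stream(chunks: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a text stream."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        parts = SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence.
        for sentence in parts[:-1]:
            if sentence:
                yield sentence
        buffer = parts[-1]
    # Flush whatever remains when the stream ends.
    if buffer.strip():
        yield buffer.strip()

llm_chunks = ["Your order ", "has shipped. It should ",
              "arrive on Friday. ", "Anything else?"]
for sentence in sentences_from_stream(llm_chunks):
    print(sentence)  # each sentence could be sent to the TTS engine here
```

Because the first sentence is available after the second chunk, synthesis and playback can begin while the rest of the response is still being generated.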

Language Model Integration

The choice and configuration of language models significantly impacts the performance, cost, and capabilities of AI voice agents. Enterprise deployments require careful consideration of multiple factors:

Model Selection Criteria

Performance Benchmarks:

- Conversational Ability: How well the model maintains coherent dialogue

- Domain Knowledge: Understanding of industry-specific concepts and terminology

- Reasoning Capabilities: Ability to handle complex, multi-step problems

- Safety and Reliability: Consistent, appropriate responses in all scenarios

Operational Considerations:

- Latency Requirements: Response generation time for real-time conversations

- Cost Structure: Token-based pricing and volume discounts

- Availability and Reliability: Uptime guarantees and failover capabilities

- Regional Deployment: Data sovereignty and compliance requirements

Prompt Engineering for Voice Applications

Effective prompt engineering is crucial for consistent AI voice agent performance:

System Prompt Template:

You are a professional customer service representative for [COMPANY].

Your role is to [SPECIFIC_ROLE].

Key behaviors:

- Keep responses conversational and concise (under 100 words)

- Ask one question at a time

- Confirm understanding before proceeding

- Transfer to human agents for [ESCALATION_CRITERIA]

Current conversation context: {CONTEXT}

Customer information: {CUSTOMER_DATA}

Previous conversation summary: {HISTORY}

Advanced Prompt Techniques:

- Few-shot Learning: Providing examples of desired responses

- Role-based Instructions: Defining specific personality and behavior patterns

- Context Injection: Dynamically incorporating relevant business data

- Safety Guardrails: Preventing inappropriate or off-brand responses
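Context injection into the system prompt template shown earlier can be sketched with ordinary string formatting. The field names below are lower-cased versions of the template's placeholders, and `build_system_prompt` is a hypothetical helper, not part of any particular platform.

```python
SYSTEM_PROMPT = (
    "You are a professional customer service representative for {company}.\n"
    "Your role is to {role}.\n"
    "Key behaviors:\n"
    "- Keep responses conversational and concise (under 100 words)\n"
    "- Ask one question at a time\n"
    "- Confirm understanding before proceeding\n"
    "- Transfer to human agents for {escalation_criteria}\n"
    "Current conversation context: {context}\n"
    "Customer information: {customer_data}\n"
    "Previous conversation summary: {history}"
)

def build_system_prompt(**fields: str) -> str:
    """Fill the template; raises KeyError if any placeholder is missing."""
    return SYSTEM_PROMPT.format(**fields)

prompt = build_system_prompt(
    company="Acme",
    role="answer billing questions",
    escalation_criteria="legal complaints",
    context="inbound call",
    customer_data="tier: gold",
    history="none",
)
print(prompt)
```

Failing loudly on a missing field is a deliberate choice here: a prompt that silently ships with an unfilled placeholder is harder to notice in production than an exception.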

Multi-Model Architectures

Sophisticated voice AI systems often employ multiple models for different tasks:

Specialized Models:

- Intent Classification Models: Fast, lightweight models for initial request routing

- Entity Extraction Models: Specialized models for extracting structured data

- Safety Models: Dedicated models for content filtering and compliance

- Emotion Detection Models: Analyzing caller sentiment and emotional state

Hybrid Approaches:

- Model Routing: Directing different types of requests to optimal models

- Ensemble Methods: Combining outputs from multiple models for better accuracy

- Fallback Hierarchies: Graceful degradation when primary models are unavailable
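A fallback hierarchy can be as simple as trying providers in priority order. In the sketch below, `primary_model` and `backup_model` are stand-in functions that simulate an outage and a working backup; they are not real provider clients.

```python
from typing import Callable, Sequence

Provider = tuple[str, Callable[[str], str]]

def generate_with_fallback(prompt: str,
                           providers: Sequence[Provider]) -> tuple[str, str]:
    """Try each provider in order; return (name, response) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # real code would catch provider-specific errors
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))

def primary_model(prompt: str) -> str:
    raise TimeoutError("primary timed out")  # simulated outage

def backup_model(prompt: str) -> str:
    return f"(backup) You said: {prompt}"

used, reply = generate_with_fallback(
    "hi", [("primary", primary_model), ("backup", backup_model)]
)
print(used, reply)
```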

Omnichannel Architecture

Modern businesses require AI agents that can maintain consistent conversations across multiple communication channels. This presents unique technical challenges around context synchronization, data management, and user experience consistency.

Context Synchronization Technologies

Model Context Protocol (MCP) Servers:

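The Model Context Protocol (MCP) is an open standard for connecting AI applications to external tools and data sources. An MCP server that fronts a shared context store can give agents on every channel access to the same conversation state, so customers do not have to repeat themselves when switching from voice to WhatsApp to email.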

Key Features of MCP Implementation:

- Unified Session Management: Single conversation thread across all channels

- State Persistence: Maintaining conversation history and user preferences

- Real-time Synchronization: Instant context updates across channels

- Conflict Resolution: Handling simultaneous interactions on multiple channels

Technical Architecture:

Channel 1 (Voice)    ──┐
                       ├── MCP Server ──→ Unified Context Store
Channel 2 (WhatsApp) ──┤                          ↓
Channel N            ──┘                 AI Processing Engine

Channel-Specific Optimizations

Each communication channel has unique technical requirements and constraints:

Voice Channels:

- Latency Optimization: Sub-500ms response times for natural conversation flow

- Audio Quality: Handling various telephony codecs and network conditions

- Interruption Handling: Managing barge-in and conversational overlaps

- Hold and Transfer: Seamless handoffs to human agents with context preservation

WhatsApp Business API:

- Template Management: Automated template creation and approval workflows

- Media Handling: Processing images, documents, and voice messages

- Business Rules: Compliance with WhatsApp’s messaging policies

- Webhook Processing: Real-time message handling and delivery confirmations

Email Integration:

- Thread Management: Maintaining conversation continuity across email threads

- Rich Content: Handling HTML formatting, attachments, and embedded media

- Delivery Tracking: Managing read receipts and bounce handling

- Anti-spam Compliance: Ensuring deliverability and regulatory compliance

Social Media Channels:

- Platform APIs: Integration with Facebook, Instagram, Twitter, LinkedIn

- Rate Limiting: Managing API quotas and request throttling

- Content Moderation: Automated filtering for public-facing responses

- Analytics Integration: Tracking engagement and conversion metrics

Data Synchronization Challenges

Real-time Consistency:

- Event Ordering: Ensuring chronological consistency across channels

- Conflict Resolution: Handling simultaneous updates from multiple sources

- Cache Invalidation: Maintaining fresh context across distributed systems

- Eventual Consistency: Managing temporary inconsistencies in distributed systems
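Event ordering across channels can be illustrated with a small in-memory store that keeps each customer's events sorted by timestamp, even when they arrive out of order. The `ContextStore` class is hypothetical and assumes all channels stamp events from a shared, synchronized clock; a real deployment would back this with a database and handle clock skew.

```python
import bisect
from dataclasses import dataclass, field

@dataclass(order=True)
class Event:
    timestamp: float
    sequence: int                        # tie-breaker for equal timestamps
    channel: str = field(compare=False)
    text: str = field(compare=False)

class ContextStore:
    """In-memory store that keeps one time-ordered thread per customer."""

    def __init__(self) -> None:
        self._events: dict[str, list[Event]] = {}
        self._sequence = 0

    def append(self, customer_id: str, channel: str,
               text: str, timestamp: float) -> None:
        self._sequence += 1
        event = Event(timestamp, self._sequence, channel, text)
        # insort places late-arriving events at their chronological position.
        bisect.insort(self._events.setdefault(customer_id, []), event)

    def history(self, customer_id: str) -> list[tuple[str, str]]:
        return [(e.channel, e.text) for e in self._events.get(customer_id, [])]

store = ContextStore()
store.append("c1", "voice", "I need to change my address", 100.0)
store.append("c1", "email", "Confirmation sent", 130.0)
store.append("c1", "whatsapp", "Here is a photo of my ID", 115.0)  # arrives late
print(store.history("c1"))
```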

Performance Optimization:

- Context Caching: Intelligent caching strategies for frequently accessed data

- Lazy Loading: Loading context incrementally to minimize latency

- Background Synchronization: Asynchronous updates to maintain responsiveness

- Compression: Efficient encoding of conversation history and context data

Real-Time Processing and Latency Optimization

For AI voice agents to feel natural and engaging, they must respond with human-like timing. This requires sophisticated optimization at every layer of the technology stack.

Latency Targets and Benchmarks

Industry Benchmarks:

- Total Response Time: Under 1 second from speech end to response start

- STT Latency: Under 200ms for real-time transcription

- LLM Processing: Under 500ms for response generation

- TTS Synthesis: Under 300ms for audio generation

- Network Latency: Under 100ms for telephony and API calls
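The per-stage targets above can be turned into a simple budget check. The stage keys and the `check_latency` function are names invented for this sketch, and "under" is read strictly, so a stage that exactly hits its target counts as over budget.

```python
# Targets taken from the benchmark list above, in milliseconds.
TARGETS_MS = {"stt": 200, "llm": 500, "tts": 300, "network": 100}
TOTAL_TARGET_MS = 1000

def check_latency(measured_ms: dict[str, float]) -> dict:
    """Compare measured stage latencies against per-stage and total targets."""
    over = {
        stage: ms
        for stage, ms in measured_ms.items()
        if stage in TARGETS_MS and ms >= TARGETS_MS[stage]
    }
    total = sum(measured_ms.values())
    return {
        "total_ms": total,
        "within_total": total < TOTAL_TARGET_MS,
        "over_budget": over,
    }

slow_turn = {"stt": 150, "llm": 620, "tts": 250, "network": 80}
print(check_latency(slow_turn))
```

Reporting which stage broke its budget, and not only the total, makes it easier to see where optimization effort should go.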

Latency Breakdown Analysis:

Total Latency = STT + LLM + TTS + Network + Processing Overhead

Example: 150ms + 400ms + 250ms + 80ms + 120ms = 1000ms

Optimization Strategies

Infrastructure Optimization:

- Edge Computing: Deploying processing closer to users to reduce network latency

- CDN Utilization: Caching static assets and routing optimizations

- Multi-Region Deployment: Strategic placement of compute resources

- Load Balancing: Intelligent request routing to optimal servers

Algorithmic Optimization:

- Streaming Processing: Processing audio and generating responses in parallel

- Predictive Prefetching: Anticipating likely responses to reduce generation time

- Model Optimization: Using smaller, faster models for time-critical components

- Batch Processing: Grouping similar requests for efficient processing

System Architecture Optimization:

- Microservices Architecture: Parallel processing of independent components

- Asynchronous Processing: Non-blocking operations throughout the pipeline

- Connection Pooling: Reusing database and API connections

- Memory Management: Efficient allocation and garbage collection

Monitoring and Performance Measurement

Key Performance Indicators (KPIs):

- 95th Percentile Latency: The latency that 95% of requests stay at or below, which reflects the experience of most users better than an average does

- Error Rates: Tracking failures at each stage of the pipeline

- Availability: Measuring system uptime and reliability

- Resource Utilization: CPU, memory, and network usage patterns
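For reference, a 95th percentile can be computed from raw latency samples with the nearest-rank method, sketched below. Monitoring tools often use interpolating or approximate estimators instead, so their figures may differ slightly from this one.

```python
import math

def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least
    pct percent of all samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

# Twenty illustrative response times in milliseconds: 100, 200, ..., 2000.
latencies_ms = list(range(100, 2100, 100))
print(percentile(latencies_ms, 95), percentile(latencies_ms, 50))
```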

Monitoring Infrastructure:

- Real-time Dashboards: Live monitoring of system performance

- Alerting Systems: Automated notifications for performance degradation

- Distributed Tracing: Following requests through the entire system

- Synthetic Testing: Continuous testing of critical user journeys

Enterprise Deployment Considerations

Enterprise deployments of AI voice agents involve additional complexities around security, compliance, scalability, and integration with existing business systems.

Multi-Cloud and Hybrid Architectures

Cloud Provider Options:

- AWS: Comprehensive AI services with strong enterprise features

- Google Cloud Platform: Advanced AI capabilities and global infrastructure

- Microsoft Azure: Deep integration with Microsoft business tools

- Multi-Cloud Strategies: Avoiding vendor lock-in and optimizing for different regions

Deployment Models:

- Public Cloud: Fully managed services with rapid deployment

- Private Cloud: Dedicated infrastructure for enhanced security and control

- Hybrid Solutions: Combining public and private resources for optimal balance

- On-Premises: Complete control for highly regulated industries

Integration Patterns

CRM Integration:

AI Voice Agent  ←→  CRM System
      ↓                  ↓
Customer Data   ←→  Conversation History
      ↓                  ↓
      Unified Customer Profile

Common Integration Scenarios:

- Salesforce Integration: Real-time lead updates and opportunity management

- HubSpot Connectivity: Marketing automation and contact management

- Custom CRM Systems: API-based integration for proprietary systems

- ERP Integration: Access to customer orders, billing, and account information

Webhook and API Architecture:

- Real-time Notifications: Instant updates to business systems

- Bidirectional Data Flow: Synchronized information across all systems

- Error Handling: Robust retry mechanisms and failure notifications

- Rate Limiting: Protecting backend systems from excessive API calls
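Retry handling for webhook delivery is often built on exponential backoff. The sketch below is one way to do it; `deliver_with_retry` and its defaults are assumptions for illustration, and the `send` callable stands in for whatever HTTP client the integration uses.

```python
import time
from typing import Callable

def deliver_with_retry(send: Callable[[], int],
                       max_attempts: int = 4,
                       base_delay: float = 0.5,
                       sleep: Callable[[float], None] = time.sleep) -> int:
    """Call send() until it returns a 2xx status code.

    Waits base_delay, then 2x, then 4x (and so on) between attempts.
    Returns the number of attempts used; raises if all attempts fail.
    """
    for attempt in range(max_attempts):
        status = send()
        if 200 <= status < 300:
            return attempt + 1
        if attempt < max_attempts - 1:
            sleep(base_delay * 2 ** attempt)
    raise RuntimeError(f"delivery failed after {max_attempts} attempts")

# Simulated endpoint that fails twice and then succeeds.
responses = iter([503, 503, 200])
delays: list[float] = []
attempts = deliver_with_retry(lambda: next(responses), sleep=delays.append)
print(attempts, delays)
```

Passing `sleep` as a parameter keeps the example testable without real waiting. Adding random jitter to each delay is a common refinement so that many clients do not retry in lockstep.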

Scalability Planning

Traffic Patterns:

- Peak Load Planning: Handling seasonal spikes and marketing campaigns

- Geographic Distribution: Supporting global operations across time zones

- Concurrent Call Limits: Planning for simultaneous conversation capacity

- Growth Projections: Scalable architecture for business expansion
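Concurrent call capacity can be estimated with Little's law, which says the average number of calls in progress equals the arrival rate multiplied by the average call duration. The function below applies it and adds a headroom percentage; the 30% default and the traffic figures are illustrative assumptions, not recommendations.

```python
import math

def concurrent_calls(calls_per_hour: float,
                     avg_call_seconds: float,
                     headroom_pct: int = 30) -> int:
    """Estimate concurrent call capacity using Little's law (L = rate x duration)."""
    average = calls_per_hour * avg_call_seconds / 3600
    return math.ceil(average * (100 + headroom_pct) / 100)

# 1,200 calls per hour at 3 minutes each averages 60 calls in progress.
print(concurrent_calls(1200, 180))
```

Little's law gives an average, not a peak. Bursty traffic calls for more headroom or a proper queueing model.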

Auto-scaling Strategies:

- Horizontal Scaling: Adding more server instances during high demand

- Vertical Scaling: Increasing server capacity for compute-intensive operations

- Predictive Scaling: Using historical data to anticipate capacity needs

- Cost Optimization: Balancing performance with infrastructure costs

Security and Compliance

Enterprise AI voice agents handle sensitive customer information and must meet stringent security and regulatory requirements.

Data Protection and Privacy

Data Classification:

- Personal Information: Names, contact details, account numbers

- Sensitive Data: Financial information, health records, confidential business data

- Conversation Content: Audio recordings, transcripts, interaction logs

- Metadata: Call duration, timestamps, system performance metrics

Protection Mechanisms:

- Encryption in Transit: TLS 1.3 for all API communications

- Encryption at Rest: AES-256 for stored data and backups

- Access Controls: Role-based permissions and multi-factor authentication

- Data Minimization: Collecting and retaining only necessary information

Data Residency and Sovereignty:

- Regional Compliance: Ensuring data remains within required geographic boundaries

- Cross-Border Transfers: Managing data flows according to international agreements

- Backup and Recovery: Secure, compliant backup strategies

- Data Retention Policies: Automated deletion according to regulatory requirements

Compliance Frameworks

Industry Standards:

- SOC 2 Type II: Security, availability, and confidentiality controls

- ISO 27001: Information security management systems

- GDPR Compliance: European data protection regulations

- HIPAA: Healthcare information privacy and security

- PCI DSS: Payment card industry data security standards

Implementation Requirements:

- Audit Trails: Comprehensive logging of all system access and modifications

- Data Processing Agreements: Clear contractual frameworks with all vendors

- Privacy Impact Assessments: Regular evaluation of data processing risks

- Breach Notification: Automated systems for rapid incident response

Operational Security

Infrastructure Security:

- Network Segmentation: Isolating AI voice systems from other business systems

- Firewall Configuration: Restricting network access to authorized sources only

- Intrusion Detection: Real-time monitoring for security threats

- Vulnerability Management: Regular security assessments and patch management

Access Management:

- Identity Providers: Integration with enterprise SSO systems

- Privileged Access: Special controls for administrative functions

- Session Management: Automatic logout and session timeout policies

- Audit Logging: Detailed records of all user activities

Performance Monitoring and Analytics

Effective monitoring and analytics are essential for maintaining high-performance AI voice agents and continuously improving customer experiences.

Real-Time Monitoring

System Health Metrics:

- Response Times: End-to-end latency for voice processing pipeline

- Error Rates: Failure rates at each component (STT, LLM, TTS)

- Availability: Uptime measurements for all critical services

- Resource Utilization: CPU, memory, and network usage across infrastructure

Conversation Quality Metrics:

- Intent Recognition Accuracy: How well the system understands user requests

- Response Relevance: Quality and appropriateness of AI-generated responses

- Conversation Completion Rates: Percentage of successful interactions

- User Satisfaction Scores: Direct feedback from conversation participants

Analytics and Business Intelligence

Conversation Analytics:

- Topic Analysis: Understanding what customers are calling about

- Sentiment Trends: Tracking customer satisfaction over time

- Agent Performance: Comparing AI and human agent effectiveness

- Channel Preferences: Understanding how customers prefer to communicate

Business Impact Metrics:

- Cost Reduction: Savings from automating customer interactions

- Revenue Impact: Increased sales and customer retention from AI agents

- Operational Efficiency: Reduction in call center staffing requirements

- Customer Experience Improvements: Faster resolution times and higher satisfaction

Continuous Improvement

A/B Testing Framework:

- Response Variations: Testing different AI-generated responses

- Voice Selection: Optimizing voice characteristics for different customer segments

- Conversation Flows: Improving dialogue patterns and interaction design

- Integration Performance: Testing different backend system configurations
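A/B tests on conversations usually need each caller to see the same variant every time. One way to get that is to hash the caller and experiment identifiers, as in this sketch. The function name, identifiers, and variant labels are hypothetical.

```python
import hashlib

def assign_variant(caller_id: str, experiment: str,
                   variants: tuple[str, ...] = ("control", "treatment")) -> str:
    """Deterministically map a caller to a variant for a given experiment."""
    key = f"{experiment}:{caller_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]

first = assign_variant("caller-42", "greeting-test")
second = assign_variant("caller-42", "greeting-test")
print(first == second)  # same caller, same experiment, same variant
```

Including the experiment name in the hash means a caller's assignment in one test does not determine their assignment in another.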

Machine Learning Feedback Loops:

- Conversation Outcomes: Training models based on successful interactions

- Error Analysis: Identifying and correcting common failure patterns

- Performance Optimization: Automatically tuning system parameters

- Predictive Analytics: Anticipating customer needs and system requirements

Future Trends and Innovations

The field of AI voice agents is rapidly evolving, with several emerging trends that will shape the next generation of conversational AI systems.

Multimodal AI Integration

Vision and Voice Combination:

- Visual Context: AI agents that can analyze shared images during voice calls

- Screen Sharing: Collaborative problem-solving with visual assistance

- Document Processing: Real-time analysis of customer-provided documents

- Video Calling: Enhanced communication through visual cues and expressions

Contextual Intelligence:

- Environmental Awareness: Understanding caller’s physical context

- Device Integration: Seamless handoffs between smartphones, computers, and IoT devices

- Biometric Authentication: Voice prints and other biometric identification

- Emotional Intelligence: Advanced emotion detection and appropriate response adaptation

Advanced Language Capabilities

Specialized Domain Models:

- Industry-Specific Training: AI agents trained on specialized knowledge bases

- Technical Documentation: Deep integration with product manuals and knowledge systems

- Regulatory Compliance: Models that understand and apply industry regulations

- Cultural Adaptation: AI agents that adapt to local customs and communication styles

Improved Conversation Abilities:

- Long-term Memory: Maintaining context across weeks or months of interactions

- Personality Consistency: Stable, branded personality traits across all interactions

- Humor and Empathy: More human-like emotional responses and social intelligence

- Multilingual Fluency: Seamless code-switching and translation capabilities

Infrastructure Evolution

Edge AI Deployment:

- Local Processing: Reducing latency through on-device AI processing

- Privacy Enhancement: Keeping sensitive data local while maintaining AI capabilities

- Offline Functionality: Basic AI capabilities even without internet connectivity

- 5G Integration: Leveraging high-speed networks for enhanced mobile AI experiences

Quantum Computing Impact:

- Optimization Problems: Quantum algorithms for conversation flow optimization

- Security Enhancement: Quantum-resistant encryption for sensitive voice data

- Model Training: Accelerated training of large language models

- Complex Reasoning: Enhanced capability for multi-step problem solving

Conclusion

AI voice agents represent one of the most sophisticated applications of modern artificial intelligence technology. Their success depends on the seamless integration of multiple complex systems: speech recognition, natural language processing, knowledge management, and voice synthesis.

Key Technical Takeaways:

- Architecture Matters: The design of the underlying system architecture significantly impacts performance, scalability, and reliability.

- Latency is Critical: Every millisecond counts in creating natural conversational experiences. Optimization must occur at every layer of the stack.

- Context is King: Maintaining conversation context across multiple channels and interactions is essential for truly useful AI agents.

- Enterprise Requirements are Complex: Security, compliance, and integration requirements add significant complexity to deployment and operations.

- Continuous Optimization is Essential: AI voice agents require ongoing monitoring, analysis, and improvement to maintain high performance.

As the technology continues to evolve, we can expect AI voice agents to become even more sophisticated, handling increasingly complex business scenarios while providing more natural and helpful customer experiences.

The businesses that successfully deploy AI voice agents today will have a significant competitive advantage, offering 24/7 customer support, reduced operational costs, and enhanced customer satisfaction. Understanding the technical foundation of these systems is the first step toward successful implementation.

Ready to implement AI voice agents for your business? Contact TringTring.AI to explore how our enterprise-grade omnichannel AI platform can transform your customer communications.

Related Reading:

- Voice AI Model Comparison: GPT-4o vs Claude vs Gemini for Voice Applications

- Building Scalable Voice AI: From MVP to Enterprise

- Voice AI Security: Protecting Conversations in Enterprise Deployments

- Multi-Channel AI: Integrating WhatsApp, Voice, and Web Chat