Enterprise decision-makers face a deceptively simple question: which voice agent platform is right for scaling conversations at the speed and reliability customers now expect? On the surface, Bland AI vs Synthflow looks like a straight tool comparison. But under the hood, we’re talking about deeply technical systems—real-time audio streaming, low-latency inference, LLM-driven dialogue orchestration—where tradeoffs matter. A single architecture decision can ripple into business outcomes like call containment rates, customer satisfaction, and infrastructure spend.

In this post, we’ll break down the voice agent platform comparison in technical detail while staying grounded in business value. Think of this as a translator’s guide: what engineers talk about when they debate millisecond latency, and what that means for your customer experience KPIs.

The Core Question: What Makes Voice Agents Hard?

Human conversation is fast, fluid, and unforgiving. If a system hesitates for more than 500 milliseconds, users feel the lag as “robotic.” If transcription misses an accent or industry-specific term, the dialogue derails.

Here’s the technical crux:

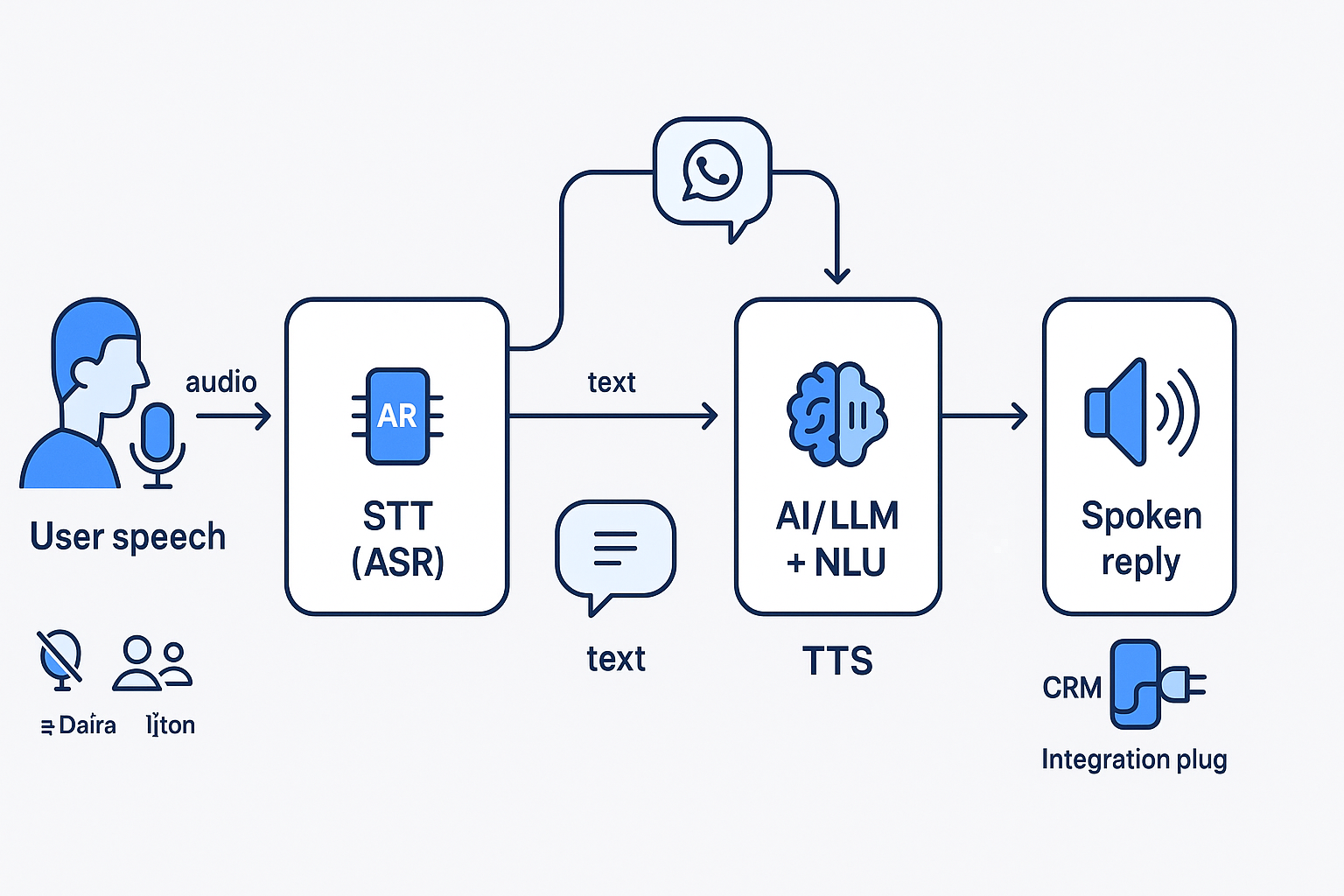

- Voice agents aren’t just LLMs bolted onto telephony.

- They require speech-to-text (STT), natural language understanding (NLU), and text-to-speech (TTS) stitched together in a pipeline.

- Every stage introduces potential latency and error.

Why this matters for business: high drop-off rates often trace back to micro-latency or accuracy issues that look invisible on a slide deck but are glaring in live calls.

Architecture Comparison: Bland AI vs Synthflow

Both platforms aim to solve the same fundamental problem—automating human-like conversations—but they approach architecture differently.

Bland AI

Technically speaking, Bland AI is optimized for latency-first performance. It prioritizes rapid inference by using distributed edge nodes. This reduces the audio round-trip time.

- Latency Benchmarks: Sub-300ms average response (critical because >500ms is perceptible to users).

- Architecture: Edge inference nodes plus centralized LLM orchestration.

- Strengths: Consistent response speed, robust call handling for global deployments.

- Tradeoffs: Limited flexibility in customizing dialogue flows—tuning requires engineering support.

Business translation: Bland AI works best if your number-one priority is speed and scale across diverse geographies. If you’re a BPO handling millions of calls, shaving 200ms off latency could mean thousands of happier customers daily.

Synthflow

Synthflow, by contrast, emphasizes workflow and conversation design flexibility. Its architecture is more developer-centric, with modular APIs for STT, NLU, and TTS selection.

- Latency Benchmarks: 350–450ms average response depending on stack configuration.

- Architecture: API-first modular stack, allowing plug-and-play with third-party models.

- Strengths: Deep customization—choose your own STT or TTS engine, build conditional flows.

- Tradeoffs: Performance depends on your configuration. Misconfigured stacks can spike latency.

Business translation: Synthflow suits enterprises where control and customization outweigh raw speed. Financial services or regulated industries often prefer this model, where you need to embed custom compliance logic into flows.

Technical Deep Dive: Where the Differences Show

1. Speech-to-Text (STT) Accuracy

- Bland AI: Uses proprietary ASR (automatic speech recognition) tuned for contact-center data. Accuracy ~90–92% in noisy conditions.

- Synthflow: Lets you bring your own STT (e.g., Deepgram, Whisper). Accuracy can reach 94–95% in clean audio but varies by model.

Why it matters: 2–3% accuracy difference may sound small, but in practice it can mean tens of thousands of misinterpreted intents monthly for a large enterprise.

2. Latency Management

- Bland AI: Edge computing reduces latency by 30–40% versus cloud-only solutions.

- Synthflow: Dependent on chosen STT/TTS vendors—latency may climb if services aren’t regionally optimized.

“We architected for sub-300ms latency because research shows users perceive delays over 500ms as unnatural—that required edge computing with distributed inference.”

— Technical Architecture Brief

3. Integration Complexity

- Bland AI: Offers pre-built integrations with major CRMs and telephony providers. Limited ability to customize pipelines.

- Synthflow: Requires developer effort but provides granular APIs for custom integration.

Business tradeoff: Bland accelerates time-to-market, Synthflow offers long-term flexibility.

4. Real-Time Error Handling

- Bland AI: Automatic fallback scripts if STT fails mid-call.

- Synthflow: Developer must script fallback; more powerful, but more complex.

In practice: We’ve seen enterprises lose 5–10% of calls when fallback handling isn’t architected properly.

Real-World Example: Scaling with Latency in Mind

One enterprise processed 10 million calls annually. With a 400ms response system, average call length was 4.5 minutes. By migrating to a 250ms system, calls shortened to 4.2 minutes—a 7% time saving. That small improvement cut annual telephony costs by nearly $1.2M.

Lesson: Latency isn’t just a technical bragging point—it has measurable financial implications.

Platform Feature Comparison: Bland AI vs Synthflow

| Feature | Bland AI | Synthflow |

|---|---|---|

| Latency | 250–300ms avg | 350–450ms avg (config-dependent) |

| STT Accuracy | 90–92% (optimized in noisy data) | 92–95% (depends on chosen engine) |

| Integration Model | Pre-built connectors | API-first, developer-driven |

| Customization | Limited | High |

| Fallback Handling | Built-in | Customizable |

| Global Deployment | Edge inference nodes | Cloud-based, region dependent |

| Best For | High-volume, latency-sensitive ops | Regulated, customization-heavy ops |

Technical Requirements: What You Need to Know

When evaluating Bland Synthflow differences, it’s not just platform features—it’s your infrastructure reality.

- Latency Budget: Do you need sub-300ms, or can you tolerate 400–500ms? Map this to customer tolerance.

- Integration Depth: Pre-built connectors get you live faster; APIs future-proof your stack.

- Scalability: Edge deployment requires multi-region infrastructure; API-driven requires strong developer bandwidth.

- Compliance & Security: Bland’s packaged integrations simplify compliance; Synthflow gives more control to meet custom regulations.

- Cost Modeling: Faster latency can reduce average handling time (AHT), but high customization increases upfront engineering spend.

Conclusion: Choosing the Right Voice Agent Platform

Voice AI isn’t “solved” yet—latency, accuracy, and workflow orchestration remain active engineering challenges. Bland AI vs Synthflow isn’t about picking a “winner,” but aligning technical tradeoffs with business priorities.

- If latency at scale is your North Star, Bland AI delivers predictable performance.

- If custom workflows and control matter more, Synthflow offers flexibility—even if it comes with engineering overhead.

The real takeaway? Both platforms can be the best voice agent platform—depending on your context.

Ready to Dive Deeper?

Technical implementation varies by environment, stack, and customer use case. Want to get into the weeds for your setup? Our solutions architects offer free 30-minute technical consultations—we’ll review your current infrastructure, integration points, and latency tolerance.

Explore our full capabilities and bring your technical questions—we speak your language.