Why Ethics in Voice AI Isn’t Optional Anymore

Imagine calling your bank and being routed through a voice agent that misunderstands your accent, logs your data insecurely, and offers you a product you don’t qualify for. That’s not just a bad customer experience—it’s an ethical failure.

Voice AI is moving fast. By 2025, more than 28% of enterprises have production-grade deployments. But with speed comes responsibility. Voice AI touches identity, emotion, and trust more directly than text-based systems do. That means bias, privacy, and responsible design aren’t just checkboxes; they’re strategic imperatives.

By the end of this piece, you’ll understand the key ethical challenges, the technical underpinnings, and the practical steps enterprises can take to deploy voice AI responsibly.

Bias in Voice Systems: Why It Happens and Why It Matters

Bias in AI often feels abstract until you see it in action. Voice systems trained primarily on U.S. English data, for example, routinely struggle with accents from India, Nigeria, or even regional U.K. dialects.

Why it happens:

- Training Data Imbalance – Overrepresentation of certain accents, genders, or age groups.

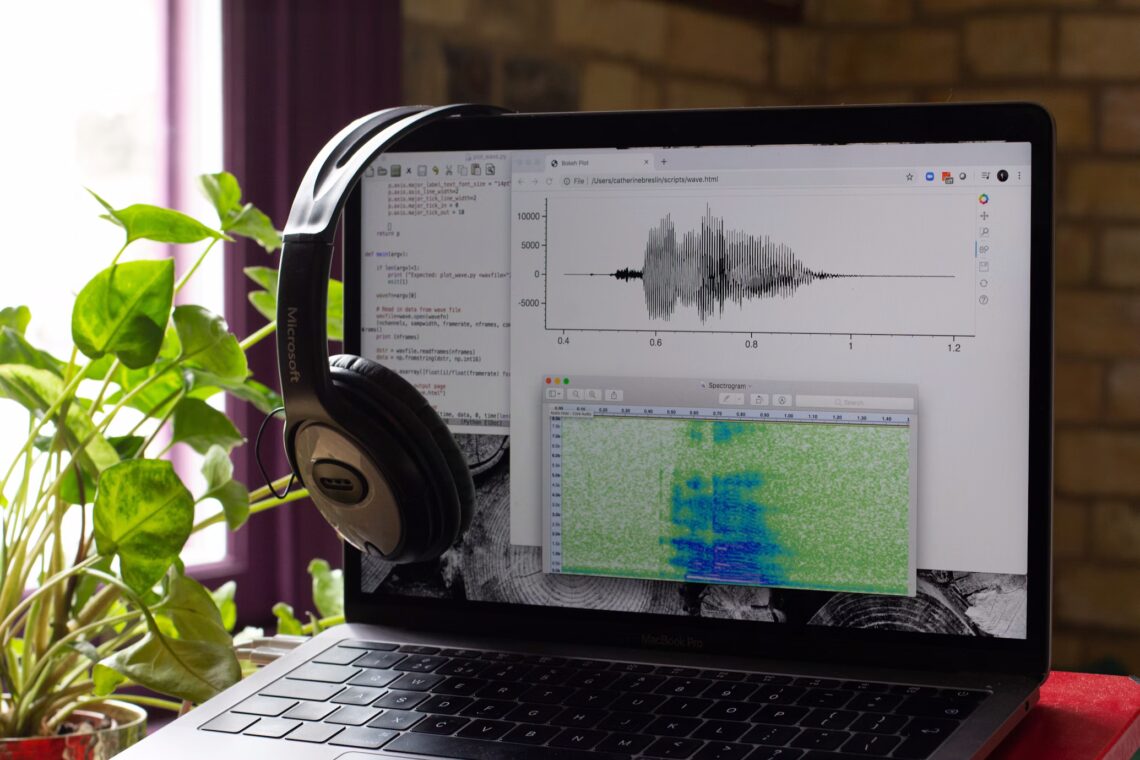

- Acoustic Environments – Models trained in clean lab settings underperform in noisy, real-world conditions.

- Labeling Subjectivity – Human annotators bring their own unconscious bias to the training process.
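The first cause, training data imbalance, is the easiest to catch before it ships: count who is actually in the data. Here is a minimal sketch, assuming each training clip carries a hypothetical accent or region label. The 50% and 5% cut-offs are illustrative assumptions, not industry standards.

```python
from collections import Counter


def audit_composition(labels, max_share=0.5, min_share=0.05):
    """Report each group's share of a dataset and flag outliers.

    labels: one group label per training clip (e.g. an accent or region tag).
    Returns (shares, over, under), where over/under list the groups whose
    share is above max_share or below min_share.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no labelled samples to audit")
    shares = {group: n / total for group, n in counts.items()}
    over = sorted(g for g, s in shares.items() if s > max_share)
    under = sorted(g for g, s in shares.items() if s < min_share)
    return shares, over, under


# Illustrative data only: 90% of clips come from one region.
labels = ["en-US"] * 90 + ["en-IN"] * 6 + ["en-NG"] * 4
shares, over, under = audit_composition(labels)
print(over, under)  # ['en-US'] ['en-NG']
```

One caveat: a group that is missing entirely never appears in the counts, so compare the labels against the list of markets you actually serve, not just against each other.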

Why it matters:

- Fairness: Customers who aren’t understood drop out faster.

- Revenue: Enterprises lose sales when systems fail to recognize intent.

- Reputation: A biased system can generate PR disasters—“AI doesn’t understand women’s voices” is not a headline any brand wants.

“We tested our voice bot with 100 customers in five regions. Accuracy in the U.S. was 94%. In India, it dropped to 71%. That gap was unacceptable.”

— Head of CX, Global Retail
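A gap like that should surface from a routine fairness test, not from a customer complaint. The quote doesn’t say how accuracy was measured, so the sketch below swaps in word error rate (WER), a standard speech recognition metric. It computes WER per group from hypothetical (group, reference transcript, recognized transcript) triples. The group names and sample calls are made up for illustration.

```python
from collections import defaultdict


def word_edits(reference: str, hypothesis: str) -> int:
    """Word-level Levenshtein distance: substitutions + deletions + insertions."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))  # distances from an empty reference prefix
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1]


def wer_by_group(samples):
    """samples: iterable of (group, reference transcript, recognized transcript).

    Returns corpus-level WER per group: total word edits / total reference words.
    """
    edits, words = defaultdict(int), defaultdict(int)
    for group, reference, hypothesis in samples:
        n = len(reference.split())
        if n == 0:
            raise ValueError("reference transcripts must not be empty")
        edits[group] += word_edits(reference, hypothesis)
        words[group] += n
    return {g: edits[g] / words[g] for g in words}


samples = [  # hypothetical test calls
    ("US", "check my balance", "check my balance"),
    ("IN", "check my balance", "check by balance"),
    ("IN", "pay my bill", "pay my bill"),
]
rates = wer_by_group(samples)
gap = max(rates.values()) - min(rates.values())
```

Pooling edits over all words in a group, rather than averaging per-call rates, keeps a handful of very short utterances from dominating the score. The `gap` value is the number to put a release threshold on.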

Privacy: Voice as Biometric Data

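Voice isn’t just another data stream. It can be biometric: a voiceprint can identify a specific person. That makes it regulated personal information under GDPR, HIPAA, and India’s DPDP Act, and GDPR goes further, treating biometric data used to identify someone as a special category with stricter processing rules.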

Key privacy risks:

- Over-Collection: Capturing more voice data than needed.

- Cross-Border Transfers: Sending voice data to cloud servers outside local jurisdiction.

- Secondary Use: Using customer voice data to train unrelated models without consent.
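All three risks can be checked in code before any audio leaves the call flow. Here is a minimal sketch, assuming a hypothetical policy expressed as three lookups: which fields each purpose genuinely needs, which processing regions are approved for each caller jurisdiction, and which purposes the caller opted into. The purpose and region names are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessingRequest:
    purpose: str             # why the audio is used, e.g. "serve_call"
    destination_region: str  # where it would be processed or stored
    fields: frozenset        # data items the request wants to keep


def privacy_violations(request, caller_region, consented_purposes,
                       allowed_regions, needed_fields):
    """Return human-readable reasons a request breaks the (hypothetical) policy."""
    problems = []
    # Over-collection: anything beyond what this purpose needs.
    extra = request.fields - needed_fields.get(request.purpose, frozenset())
    if extra:
        problems.append(f"over-collection: {sorted(extra)}")
    # Cross-border transfer: destination not approved for the caller's jurisdiction.
    if request.destination_region not in allowed_regions.get(caller_region, set()):
        problems.append(
            f"cross-border transfer: {caller_region} -> {request.destination_region}")
    # Secondary use: a purpose the caller never opted into.
    if request.purpose not in consented_purposes:
        problems.append(f"no consent for purpose '{request.purpose}'")
    return problems
```

For example, with `needed_fields = {"serve_call": frozenset({"transcript", "intent"})}` and `allowed_regions = {"IN": {"IN"}}`, a request to ship raw audio from an Indian caller to a U.S. region for model training trips all three checks at once. Returning reasons instead of a bare yes/no gives auditors something to read.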

In practice: responsible voice AI requires privacy-preserving architectures—like on-device inference for sensitive commands, anonymization of stored audio, and explicit opt-in mechanisms.
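On-device inference for sensitive commands is mostly a routing decision. Here is a minimal sketch, assuming a lightweight local classifier has already labelled the intent. The set of sensitive intents and the three targets are hypothetical.

```python
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"
    HUMAN = "human"


# Hypothetical list; deciding what counts as sensitive is a policy call.
SENSITIVE_INTENTS = frozenset({"check_balance", "transfer_funds", "health_query"})


def route(intent: str, on_device_model_available: bool) -> Target:
    """Keep sensitive requests local; never silently fall back to the cloud."""
    if intent in SENSITIVE_INTENTS:
        # If no local model can serve it, hand the caller to a person
        # instead of shipping sensitive audio off the device.
        return Target.ON_DEVICE if on_device_model_available else Target.HUMAN
    return Target.CLOUD
```

The design choice worth copying is the fallback: when the private path is unavailable, the system degrades to a human rather than to a less private path.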

Quick aside: think of this like a medical check-up. You don’t need to share your entire health history for a flu shot—just the relevant context. Voice AI should collect only what’s necessary.
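Minimization applies to what you keep, not just what you capture. Here is a minimal sketch that masks obvious identifiers in a text transcript before storage, assuming transcripts are plain strings. It is a pattern-based stand-in, not audio anonymization. Numbers spoken as words ("four one one one") slip through, and harmless numbers such as years get masked.

```python
import re

# Runs of 4+ digits, optionally separated by spaces or hyphens
# (card, account, and phone numbers).
_DIGIT_RUN = re.compile(r"\b\d(?:[ -]?\d){3,}\b")
# Simple email shape; deliberately loose rather than RFC-complete.
_EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")


def redact(transcript: str) -> str:
    """Replace emails and long digit runs with placeholder tokens."""
    transcript = _EMAIL.sub("[EMAIL]", transcript)
    return _DIGIT_RUN.sub("[NUMBER]", transcript)


print(redact("my card is 4111 1111 1111 1111 thanks"))
# my card is [NUMBER] thanks
```

Over-redacting is the safer failure here: a masked year costs little, while a stored card number is exactly the "entire health history" the analogy warns against.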

Responsible Development: Transparency and Accountability

Here’s the cool part—responsible voice AI isn’t just about avoiding fines. It’s a differentiator. Customers increasingly trust brands that explain how their AI works.

Principles for responsible development:

- Transparency: Make confidence scores and escalation logic visible to supervisors.

- Accountability: Track decision-making for audits—especially in regulated industries.

- Explainability: Provide plain-language explanations of why an AI made a decision.
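All three principles can hang off one structured record per decision. Here is a minimal sketch, assuming a hypothetical `DecisionRecord` written as one JSON line per decision to an append-only log. Supervisors see the confidence score, auditors see the model version, and callers can get the plain-language sentence.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    call_id: str
    model_version: str  # which model made the call, for later audits
    intent: str
    confidence: float   # 0..1, surfaced to supervisors
    action: str
    reason: str

    def explain(self) -> str:
        """Plain-language explanation suitable for a caller or supervisor."""
        return (f"The assistant understood this request as '{self.intent}' "
                f"with {self.confidence:.0%} confidence and chose to {self.action} "
                f"because {self.reason}.")


def to_audit_line(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line with a UTC timestamp."""
    entry = asdict(record)
    entry["logged_at"] = datetime.now(timezone.utc).isoformat()
    return json.dumps(entry, sort_keys=True)
```

Generating the explanation from the same fields that go into the audit log keeps the two from drifting apart. What the caller is told is exactly what was recorded.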

Think of transparency like nutrition labels. Most people don’t read every detail, but the presence of the label builds trust.

The Framework for Ethical Voice AI

Let’s put it all together in a simple framework:

- Bias Mitigation – Diverse, representative training data; fairness testing across demographics.

- Privacy by Design – Limit collection, localize processing, secure storage.

- Transparency – Expose how the system works to users and supervisors.

- Accountability – Document decisions, enable audits.

- Continuous Monitoring – Bias and drift don’t disappear; they evolve.
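Continuous monitoring can start with comparing this week’s confidence scores against a baseline, separately for each accent or region group. Here is a minimal sketch using the Population Stability Index (PSI), which is one common drift statistic among several. The 10 bins and the 0.25 alert level are conventional rules of thumb, not values from this article.

```python
import math


def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of scores in [0, 1]."""
    def proportions(scores):
        if not scores:
            raise ValueError("need at least one score")
        counts = [0] * bins
        for s in scores:
            if not 0.0 <= s <= 1.0:
                raise ValueError(f"score out of range: {s}")
            counts[min(int(s * bins), bins - 1)] += 1  # s == 1.0 goes in the top bin
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(scores), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drifted_groups(baseline_by_group, current_by_group, threshold=0.25):
    """Groups whose current score distribution has shifted past the threshold."""
    return sorted(g for g, cur in current_by_group.items()
                  if g in baseline_by_group
                  and psi(baseline_by_group[g], cur) > threshold)
```

Running the check per group matters: a drop confined to one accent can vanish in the overall average while still failing every caller in that group.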

Key Insight: Ethics isn’t a one-time project. It’s an ongoing process of tuning, monitoring, and auditing.

Putting This Into Practice: What This Means for Your Team

Here are the actionable takeaways for enterprises rolling out voice AI:

- Audit your data before deployment. If 90% of your training data comes from one region, expect bias.

- Segment pilots regionally. Don’t assume U.S. accuracy rates apply globally.

- Invest in privacy-first infrastructure. Edge inference and anonymization are no longer optional.

- Build transparency into dashboards. Supervisors should see confidence scores, not just “AI said so.”

- Create escalation pathways. AI that detects emotional stress should pass to humans automatically.
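The last two takeaways fit together: the signals that trigger a handoff are the same ones a supervisor dashboard should show. Here is a minimal sketch, assuming hypothetical per-turn signals, including a stress score from an unspecified emotion detector. The thresholds are illustrative and should be tuned per deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TurnSignals:
    intent_confidence: float  # 0..1, from the intent model
    stress_score: float       # 0..1, from a hypothetical stress/emotion detector
    failed_turns: int         # consecutive turns the bot failed to understand


def escalation_reasons(s: TurnSignals, min_confidence=0.7,
                       max_stress=0.8, max_failures=2):
    """Empty list means the bot may continue; otherwise hand off and log why."""
    reasons = []
    if s.intent_confidence < min_confidence:
        reasons.append(f"low confidence ({s.intent_confidence:.2f} < {min_confidence})")
    if s.stress_score >= max_stress:
        reasons.append(f"caller stress detected ({s.stress_score:.2f})")
    if s.failed_turns >= max_failures:
        reasons.append(f"{s.failed_turns} misunderstood turns in a row")
    return reasons
```

The `failed_turns` rule targets the fairness problem from earlier. Callers the model struggles to understand reach a person after two misses instead of hanging up.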

Why this matters: ethics isn’t charity work. It’s risk management, compliance alignment, and customer trust rolled into one.

Conclusion: Ethics as Strategy, Not Compliance

The mistake many enterprises make is treating voice AI ethics as a “compliance tax.” In reality, bias, privacy, and responsibility are where brand trust and ROI intersect.

Deploying voice AI responsibly won’t make headlines. But avoiding a scandal, preventing customer churn, and building long-term trust will pay back far more than the cost of “ethics work.”

Ready to explore how to embed ethical principles in your voice AI roadmap? We run 30-minute workshops where we walk your team through bias testing, privacy frameworks, and responsible development practices. Learn by doing: book your session.